I'm happy to share a few links to posts related to DataObjects.Net:

"DataObjects.Net - ORM Framework for RAD - Introduction" by Thomas Maierhofer - the intro is really good. Thomas, thanks a lot for helping us promote the product!

I feel Paul made this post a bit early: the application isn't finished yet, so he shared his intermediate experience. I'm still helping Paul work through some issues, but as far as I know, all of them are minor now. Some of them were pretty complex challenges for us. For example, the SystemOperationLog mode and the fixes for a few really complex bugs related to atomicity of state changes are direct results of our interaction with Paul. On the other hand, they're resolved now, and this benefits all of us.

Paul, thanks a lot! Both for writing about us and for some really tricky issue reports that helped us make the product better.

I'd like to share an early link to the feature-based ORM comparison we're working on: "The Most Comprehensive Feature-Based Object-Relational Mapping Tool Comparison Ever :)™"

The document isn't complete yet:

- some cells are empty - i.e. their content is currently unknown;

- there can be some mistakes (it hasn't been checked by the community yet).

As you might suspect, ORMBattle.net participants are editing a copy of this document, so a version covering most of the tools tested there should appear soon as well. It won't appear "as-is" on our own web site, but we'll use roughly the same columns from it (direct comparison with commercial competitors in marketing materials is normally not acceptable).

On the other hand, the feature map is already quite comprehensive: there are about 270 features organized into a hierarchical structure. It is far more detailed than any other ORM comparison we were able to find (this one is likely the most detailed predecessor, but it's really ancient).

The document is likely a bit biased toward DataObjects.Net in terms of the selected features, but I expect the community to "automatically fix" this shortly: vendors are allowed to add any non-duplicate features and sections, as well as propose excluding unimportant ones.

On the other hand, it's clearly much less biased than e.g. this one (although I understand that one doesn't pretend to be a real comparison), so it can hardly be called promotional material.

Our final goal is to develop a feature map covering major ORM tools and features that various ORM vendors mutually agree on, where each vendor is responsible for the contents of its own column (i.e. cheating is possible, but I suspect users and competitors won't take it well). The table you see is our initial investment in this process.

Such a comparison should help developers choose the tools they need based on their own requirements, as well as better understand the relationships between features. The hierarchy seems really helpful here: I've already received a few quite positive comments about the structure of the document.

An accompanying document commenting on each section and describing DataObjects.Net advantages and disadvantages compared to other tools should also appear soon.

Don't forget to study the comments at the bottom of the first sheet, as well as the "Remarks" sheet.

I've just finished writing a pretty large document: "Atomicity of visible state change in complex action sequences in DataObjects.Net"

The article describes one of the important features of the event notification system in DataObjects.Net. DataObjects.Net uses this feature when it synchronizes paired (inverse) associations and when it removes entities.
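The article itself isn't reproduced here, but a small sketch can illustrate the general idea. The Python code below is a minimal model built on our own assumptions. It is not the DataObjects.Net API, and `NotificationScope`, `Customer` and `Order` are hypothetical names. Setting an order's customer updates both sides of a paired association inside one scope. Change notifications are queued until the outermost scope closes, so a subscriber never sees one side updated and the other not.

```python
class NotificationScope:
    """Hypothetical helper: queues notifications raised inside a compound
    action and delivers them only when the outermost scope closes."""

    def __init__(self):
        self.depth = 0
        self.pending = []
        self.listeners = []

    def __enter__(self):
        self.depth += 1
        return self

    def __exit__(self, exc_type, exc, tb):
        self.depth -= 1
        if self.depth == 0:
            pending, self.pending = self.pending, []
            # On failure the queued notifications are dropped
            # (rolling back state is out of scope for this sketch).
            if exc_type is None:
                for event in pending:
                    self._deliver(event)
        return False  # never swallow exceptions

    def notify(self, event):
        if self.depth > 0:
            self.pending.append(event)
        else:
            self._deliver(event)

    def _deliver(self, event):
        for listener in self.listeners:
            listener(event)


class Customer:
    def __init__(self, name):
        self.name = name
        self.orders = set()  # inverse side of Order.customer


class Order:
    def __init__(self, number, scope):
        self.number = number
        self.customer = None
        self.scope = scope

    def set_customer(self, customer):
        # Both sides of the pair change inside one scope, so listeners get
        # the "removed"/"added" events only after the pair is consistent.
        with self.scope:
            old = self.customer
            if old is not None:
                old.orders.discard(self)
                self.scope.notify(("removed", old.name, self.number))
            self.customer = customer
            if customer is not None:
                customer.orders.add(self)
                self.scope.notify(("added", customer.name, self.number))


scope = NotificationScope()
alice, bob = Customer("alice"), Customer("bob")
order = Order(1, scope)
observed = []


def check(event):
    # Record each event together with whether both sides agreed at delivery time.
    consistent = all(o.customer is c for c in (alice, bob) for o in c.orders) and (
        order.customer is None or order in order.customer.orders)
    observed.append((event, consistent))


scope.listeners.append(check)
order.set_customer(alice)
order.set_customer(bob)
```

Suppose `notify` delivered events immediately instead. The `"removed"` event would then reach `check` while `order.customer` still pointed to `alice`, even though `alice.orders` no longer contained the order. That is exactly the kind of intermediate state the sketch hides.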

Any comments, error reports and suggestions are welcome. Russian-speaking developers can read the article in Russian.


This is a minor update fixing the issues we found earlier. The most serious of the fixed bugs were in DisconnectedState:

- The new system operation logger could skip logging operations invoked from events raised by a higher-level operation.

- EntitySet<T> could lose a newly added item after refreshing its state with an attached DisconnectedState (although the item was persisted to the database).

P.S. You've asked us why the new DataObjects.Net installers are about 2 times larger than before. That's because we've added a new .mshc help file (the DataObjects.Net reference in VS2010 help format), which is in fact a 70 MB .zip archive. So in total, the various help files take up most of the installer package (30 MB for .chm, 70 MB for .mshc, 7 MB for .pdf), while the compressed DataObjects.Net binaries require about 10 MB. We're thinking about shipping help as a separate installation package.

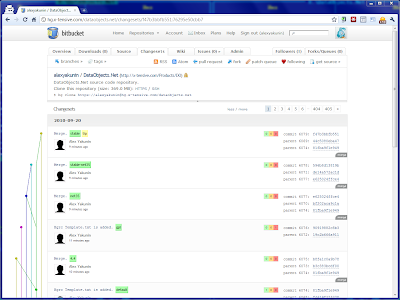

I'm glad to announce we've finally completed the migration of our primary source repository to BitBucket.org:

The repository URL is http://hg.x-tensive.com/dataobjects.net (use HTTPS for checkouts).

The repository is private, i.e. you can access it only if you have permission. To get permission, you need an Ultimate Edition license or any other agreement with our company that grants you access to the repository. If you have one, please register at BitBucket.org and send us an e-mail with the name of your BitBucket account.

Note that currently you won't be able to build DataObjects.Net from the source code stored there on its own. More precisely, you can build it, but only with licenses for the proprietary .NET Reactor and PostSharp tools. However, we'll quite likely remove the need for at least the .NET Reactor license later, so it will be possible to produce unprotected builds solely for debugging purposes.

Yesterday we published DataObjects.Net v.4.3.5 build 6015.

What's new:

This build includes a noticeably better DataObjects.Net reference:

- Help files are now generated with the newest Sandcastle. Its team has fixed lots of issues since the previous release, so the new help looks much better. This is especially true of the lists of available extension methods, which are now displayed properly.

- We've added about 200 new class summaries and member descriptions, so the reference is now almost complete. There are just a few non-essential gaps (e.g. some types from SQL DOM aren't documented).

- Finally, the new help can be integrated into Visual Studio 2010 Documentation, so F1 will work everywhere. Choosing this option during installation is all you need to activate it.

Unfortunately, the Manual hasn't been updated yet, but this is the next logical step for us.

All Session-level events were moved from Session itself to the Session.Events member, so Session is much simpler now, especially from the IntelliSense point of view. Actually, that was not the only reason for this change: you may notice there is also a Session.SystemEvents member (also of SessionEventAccessor type). The rule is:

- System events are always raised, even when all custom logic is disabled (DirectSessionAccessor.OpenSystemLogicOnlyRegion disables it). The typical case where custom logic must be disabled is deserialization, and we disable it automatically there. On the other hand, some shared logic must keep working even then. For example, VersionValidator must still be able to detect version conflicts.

- "Normal" events are raised only when custom logic is enabled. This ensures regular services won't run when only system logic is enabled.
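The rule above can be modelled in a few lines. This is a hedged Python sketch of the dispatch logic only, not the real `SessionEventAccessor` API. `SketchSession`, `EventAccessor`, `system_logic_only` and the `"EntityChanged"` event name are all hypothetical. Raising system handlers before normal ones is also our own assumption.

```python
from contextlib import contextmanager


class EventAccessor:
    """Hypothetical event accessor: handlers grouped by event name."""

    def __init__(self):
        self._handlers = {}

    def subscribe(self, name, handler):
        self._handlers.setdefault(name, []).append(handler)

    def fire(self, name, *args):
        for handler in self._handlers.get(name, []):
            handler(*args)


class SketchSession:
    def __init__(self):
        self.events = EventAccessor()         # "normal" events
        self.system_events = EventAccessor()  # raised unconditionally
        self.custom_logic_enabled = True

    @contextmanager
    def system_logic_only(self):
        # Rough analogue of a "system logic only" region, e.g. during deserialization.
        previous = self.custom_logic_enabled
        self.custom_logic_enabled = False
        try:
            yield
        finally:
            self.custom_logic_enabled = previous

    def raise_event(self, name, *args):
        self.system_events.fire(name, *args)
        if self.custom_logic_enabled:
            self.events.fire(name, *args)


session = SketchSession()
log = []
session.system_events.subscribe("EntityChanged", lambda e: log.append(("version check", e)))
session.events.subscribe("EntityChanged", lambda e: log.append(("full-text", e)))

session.raise_event("EntityChanged", "Order#1")
with session.system_logic_only():
    session.raise_event("EntityChanged", "Order#2")
```

Inside the region only the version-check handler runs, which matches the VersionValidator example. Once the region closes, the previous setting is restored.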

Other important changes related to the Session type include:

The operations framework has been redesigned to track and replay operations in three modes:

- OperationLogType.SystemOperationsLog mode enables logging of system operations. This is now the default mode, mainly because it is the simplest one.

- OperationLogType.OutermostOperationsLog mode enables logging of any outermost operations (both user and system ones). Previously the operations framework supported only this mode. User operations aren't supported yet, but this feature should be fully functional shortly.

- OperationLogType.UndoOperationsLog mode doesn't work yet, although there is a pretty good basis for making it work soon as well.
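The following Python sketch shows our reading of the difference between the first two modes, under two assumptions. First, the outermost mode records only top-level operations. Second, the system mode records system operations at any depth, unless another system operation encloses them. `LogMode`, `OperationLogger` and the operation names are hypothetical and don't mirror the real operations framework.

```python
from contextlib import contextmanager
from enum import Enum


class LogMode(Enum):
    SYSTEM_OPERATIONS = "system"
    OUTERMOST_OPERATIONS = "outermost"


class OperationLogger:
    def __init__(self, mode):
        self.mode = mode
        self._open = []  # kinds ("user"/"system") of operations currently running
        self.log = []

    @contextmanager
    def operation(self, name, kind):
        if self._should_log(kind):
            self.log.append(name)
        self._open.append(kind)
        try:
            yield
        finally:
            self._open.pop()

    def _should_log(self, kind):
        if self.mode is LogMode.OUTERMOST_OPERATIONS:
            return not self._open  # only top-level operations, user or system
        # Assumption: a system operation is logged unless another system
        # operation already encloses it.
        return kind == "system" and "system" not in self._open


def approve_order(logger):
    # A user operation implemented via two system (field-level) operations.
    with logger.operation("ApproveOrder", "user"):
        with logger.operation("SetField(Status)", "system"):
            pass
        with logger.operation("SetField(ApprovedAt)", "system"):
            pass


logs = {}
for mode in LogMode:
    logger = OperationLogger(mode)
    approve_order(logger)
    logs[mode] = logger.log
```

In this model, you can replay the system log without knowing what `ApproveOrder` does. The outermost log keeps the higher-level intent, but it only works if the user operation itself can be replayed.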

This drawing explains the difference between the first two modes:

DisconnectedState has been extended as well. Now it:

So the new DisconnectedState is much more usable in real-life scenarios. We're also going to implement a few more features that will significantly simplify building WCF applications with it.

The schema upgrade / mapping layer was extended with:

- Support for default field values: use the DefaultValue property of FieldAttribute to specify them. This feature currently doesn't work for composite keys (i.e. references to entities with composite keys).

See issue 790.

- The NullableOnUpgrade flag (in FieldAttribute as well). It is useful if you're changing a nullable field to non-nullable. In this case you'll be able to access its NULL values in upgrade handlers and, e.g., replace them with some custom value. Otherwise they'll be converted to DefaultValue or default(T).

See issue 791.
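Here's a minimal Python model of how NULLs reach an upgrade handler with and without NullableOnUpgrade, following the description above. `upgrade_to_non_nullable` and its parameters are hypothetical, and `type_default` stands in for default(T) of an integer field. We also assume that any values the handler leaves as NULL are converted to the fallback.

```python
def upgrade_to_non_nullable(values, upgrade_handler, default_value=None,
                            type_default=0, nullable_on_upgrade=False):
    """Hypothetical model of upgrading a nullable column to non-nullable."""
    # DefaultValue wins over default(T) when it is specified.
    fallback = type_default if default_value is None else default_value

    def fill(column):
        return [fallback if v is None else v for v in column]

    if not nullable_on_upgrade:
        values = fill(values)  # NULLs are converted before the handler runs
    values = upgrade_handler(list(values))
    return fill(values)  # assumption: NULLs left by the handler fall back too


def mark_missing(column):
    # Custom upgrade handler: replace NULLs with a sentinel value.
    return [-1 if v is None else v for v in column]


old_column = [5, None, 7]
without_flag = upgrade_to_non_nullable(old_column, mark_missing)
with_flag = upgrade_to_non_nullable(old_column, mark_missing, nullable_on_upgrade=True)
with_default = upgrade_to_non_nullable(old_column, lambda c: c, default_value=42)
```

Without the flag, the handler never sees the NULL, so its replacement logic has no effect (`[5, 0, 7]`). With the flag, the handler gets to decide (`[5, -1, 7]`).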

Also, we significantly improved Domain.Build(...) performance during upgrade, especially in Skip mode: it is currently 2-3 times faster than before.

- The new VersionMode enumeration allows you to specify whether a field must be included in the entity version. It also specifies whether the field's value must be updated automatically when the entity is changed for the first time in a new (outermost) transaction. "Updated" means incremented, or whatever is appropriate: currently the non-overridable VersionGenerator.Next method decides this, but we may change it in the future.

- One of the consequences, apart from better clarity: earlier it was impossible to use multiple [Version] fields requiring automatic update in the same persistent type. Now you can use them, even together with other version fields.
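A rough Python model of the behaviour described above: several automatically updated version fields per type, bumped at most once per outermost transaction. `VersionPolicy` and its members are hypothetical stand-ins, not the real VersionMode values. `next_version` simply increments, standing in for VersionGenerator.Next.

```python
from enum import Enum, auto


class VersionPolicy(Enum):
    AUTO = auto()    # part of the version, updated automatically
    MANUAL = auto()  # part of the version, updated by user code
    SKIP = auto()    # not part of the version


def next_version(current):
    return current + 1  # simplest possible stand-in for a version generator


class VersionedEntity:
    def __init__(self, version_fields):
        self.version_fields = version_fields  # field name -> VersionPolicy
        self.values = {name: 0 for name in version_fields}
        self._updated_in = None  # outermost transaction that already bumped versions

    def on_changed(self, transaction_id):
        # Only the first change in a given outermost transaction bumps AUTO fields.
        if self._updated_in == transaction_id:
            return
        self._updated_in = transaction_id
        for name, policy in self.version_fields.items():
            if policy is VersionPolicy.AUTO:
                self.values[name] = next_version(self.values[name])

    def version(self):
        return tuple(self.values[name] for name, policy in self.version_fields.items()
                     if policy is not VersionPolicy.SKIP)


entity = VersionedEntity({"Version": VersionPolicy.AUTO,
                          "Revision": VersionPolicy.AUTO,
                          "Stamp": VersionPolicy.MANUAL,
                          "Note": VersionPolicy.SKIP})
entity.on_changed(transaction_id=1)
entity.on_changed(transaction_id=1)  # second change in the same transaction: no bump
after_first = entity.version()
entity.on_changed(transaction_id=2)
after_second = entity.version()
```

Both automatically updated fields advance together, and the skipped field never becomes part of the version tuple.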

Updates and fixes:

There were lots of changes. I won't enumerate all of them here, but the most important ones are related to the stability of the schema upgrade layer. As far as I can judge, we've now fixed all the important bugs there.

Another significantly improved part is our LINQ translator - lots of reported bugs (mostly related to pretty complex or rare scenarios) were fixed there as well.

Also, there was a pretty old issue related to supporting the 64-bit version of ODP.NET (Oracle Data Provider). Thank God, Oracle has eliminated it. ODAC 11.2.0.1.2 is the most current version and the one DataObjects.Net 4.3.5 uses. It includes an Oracle.DataAccess.dll assembly built for AnyCPU, so you can use it on 64-bit platforms as well.

Btw, I noticed they started to provide an XCopy-deployable installer in this version, although, as it turned out, this isn't entirely true. You have to run a special .bat file that actually copies and configures all the binaries, and do a few other manual steps. But anyway, this is a good option.

Some of the fixes and improvements:

Changes in samples:

- The OrderAccounting sample was significantly refactored, mainly because of the new DisconnectedState features and SessionOptions.AllowSwitching. It is much simpler now, but there are still a few ugly places. Maybe the most annoying issue related to WCF support is that you have to manually add entities to view model collections when they are created, and remove them when they are deleted. These collections are normally ObservableCollections acting as data sources for grids and lists (although our EntitySets support WPF notifications, they can rarely be used there directly). Obviously, this can be fully automated with our rich set of events, and that's what we must do quite soon here.

- The WCF sample was also significantly updated for the same reason.

- All our web samples (as well as the web project template) have been migrated to the new ASP.NET 4 integrated mode web.config, but this has brought mainly negative side effects. So far, that's the only serious issue I know of that this version causes for beginners. We'll likely switch to classic mode web.configs soon.
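The automation mentioned for the OrderAccounting sample essentially means subscribing view model collections to entity creation and removal events. Below is a hedged Python sketch of that idea. `EntityEvents`, `SyncedViewCollection` and `Order` are hypothetical, and a plain list stands in for an ObservableCollection.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    number: int
    customer: str


class EntityEvents:
    """Hypothetical source of "entity created" / "entity removed" notifications."""

    def __init__(self):
        self.created = []
        self.removed = []

    def raise_created(self, entity):
        for handler in self.created:
            handler(entity)

    def raise_removed(self, entity):
        for handler in self.removed:
            handler(entity)


class SyncedViewCollection:
    """Keeps a view model list in sync with entity events, filtered by a predicate."""

    def __init__(self, events, predicate):
        self.items = []  # stand-in for an ObservableCollection bound to a grid
        self.predicate = predicate
        events.created.append(self._on_created)
        events.removed.append(self._on_removed)

    def _on_created(self, entity):
        if self.predicate(entity) and entity not in self.items:
            self.items.append(entity)

    def _on_removed(self, entity):
        if entity in self.items:
            self.items.remove(entity)


events = EntityEvents()
alice_orders = SyncedViewCollection(events, lambda o: o.customer == "alice")
first, second = Order(1, "alice"), Order(2, "bob")
events.raise_created(first)
events.raise_created(second)
snapshot = list(alice_orders.items)
events.raise_removed(first)
```

The predicate keeps each view collection limited to the entities it displays, so one event source can feed many grids.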

During the last few weeks I've been working really hard. That's why I haven't posted any new info here, even though v4.3.5 was published. Another reason is my dissatisfaction with the new DataObjects.Net v4.3.5 installer: I've been replacing it roughly every 3 days since the beginning of September, and there are still some things to improve there.

I'm unhappy only with the installer: the version of DO bundled in it is definitely better than v4.3.1, so if you use v4.3.1, upgrading is strongly recommended.

But if you're just trying DO, this version might bring some new complexities. In this version, I migrated all our web projects to the new web.config for IIS 7 / ASP.NET 4 with the integrated pipeline. I thought it was a good idea, since that's the recommended approach. And this became the source of a set of problems:

- New web projects can be hosted only by IIS 7, not by the ASP.NET Development Server.

- You must install additional components to even open such projects in VS2010/2008.

- You must be logged in as an administrator to work with such projects. I'm curious why Microsoft doesn't fix such issues ASAP.

- And finally, impersonation doesn't work in this mode, so you should allow the ASP.NET 4.0 application pool identity to log in to the "DO40-Tests" database on SQL Server.

None of these issues is fixed yet. I suspect the only good way is to switch back to classic pipeline mode. Besides that, we should fix a few other annoying issues related to DO4 installation anyway, like the need to manually create the "DO40-Tests" database and manually update connection URLs in samples. That's why we're going to spend the next 2 weeks improving the initial user experience. Some steps are already done, e.g. newer releases will include help files that can be integrated into the VS2010 Help Library:

And certainly, we'll work on the manual: all new features (and some existing ones) must be described there.

P.S. Today I'll publish one more post describing the improvements we've made in v4.3.5.

This post starts a series of posts dedicated to DataObjects.Net design, or more precisely, to its design goals. I decided to start the series with a practical example, since a picture is frequently worth more than a thousand words. A certain amount of advertising for our outsourcing team is just a side effect of this post, although if you have a serious project for these guys, you're welcome.

SeverRegionGaz is a regional natural gas provider. It is a big organization that, although itself a subdivision of Gazprom, has a set of branches of its own. It runs a bunch of legacy software systems, ranging from pretty old to new. Some of them have very similar functionality: SeverRegionGaz branches used to be independent from each other, and thus they use partially equivalent software.

Most of the data they maintain is related to:

- Billing - obviously.

- Equipment. E.g. they know exactly what's installed at each particular location (home, office, etc.).

- Incidents and customer interaction history.

- Bookkeeping. Unfortunately for us, they wanted to see certain information from two different instances of 1C Enterprise 7.5 there as well.

The main goal of the "Single Window" system is to provide a single access point for browsing all this data and, importantly, searching for any piece of information there.

Let me illustrate why this goal matters. Earlier, to find a piece of information (e.g. billing and interaction history for a particular person), they first had to identify which of these legacy systems held it. Then they had to request the information from the appropriate people, since almost no one knows all these systems precisely. In short, getting the information was a really complex and long process, and we were happy to change this.

There were a few other, minor goals, e.g. it was necessary to:

- Provide a web interface allowing customers to report the values of natural gas consumption counters and interact with support staff.

- Integrate with an external payment processing system provided by their bank and automatically process the payments made by customers.

- Implement reporting. Only some of the reports needed by SeverRegionGaz were available in the legacy systems, so we had to implement the missing ones.

To sum up, we had to develop an application for browsing a really huge database (I'll explain this later). Its editing capabilities were meant to be pretty limited, mainly because:

- Most of this information must be imported from external sources. If we were asked to support editing, it would raise the complexity to a completely different level. In fact, we would have to either sync back all the changes or replace all the legacy systems there with our own. Syncing is really hard, since none of the legacy systems is ready for it and almost no one knows exactly how these systems work at all. Replacing them is hard as well.

- The fact that different systems are still necessary for editing (mainly, data entry) is acceptable for SeverRegionGaz. The people working with them are used to them, and a single person there normally deals with a single system. With "Single Window", they have to learn just one more system to access all the data, which is much better than ~10.

- Full editing support (likely, a complete replacement of most of the legacy systems) is the primary goal for "Single Window v2", and moving toward this big goal step by step is a really good idea. For now it's OK to allow editing only the data we fully control.

That was the story behind the "Single Window" project. Now some facts about its implementation:

- Time: 7 months -- February 2010 ... July 2010. First 1.5 months were spent almost completely on specifications.

- People: initially -- 3.5 developers + 1.5 managers (Alex Ustinov was playing both roles there); closer to completion -- 6 developers.

- Database: ~ 12 GB of data, 507 tables, 440 types! (all are unique, i.e. there are no generic-based tables)

- External data sources: 8. Full data import is implemented for all of them, and continuous change migration is implemented for 3 of them.

- Other elements: hundreds of lists, forms and reports. I suspect there are almost a thousand in total.

- Complexities: lots of them, mostly related to ETL processes, ranging from some funny ones to real problems.

- Technologies used:

- DataObjects.Net 4 - btw, it's our first really big application based on it. And that's why I write this post :)

- LiveUI - it would be really hard to generate such a huge UI without this framework. Btw, Alex Ilyin adapted its core part to WPF pretty fast. We also intensively used T4 to

- WCF - we've implemented a 3-tier architecture relying on WCF as the communication protocol.

- WPF - an obvious choice for the UI,

and lots of other stuff, ending with the pretty exotic COM+ (used for integration with 1C).

Screenshots

The main tab is designed in a very minimalistic fashion:

That's what happens when user hits "Search" button:

As you can see, we use the full-text capabilities of DO4 to the full here. In fact, we index all the objects whose content matters for search. Full-text indexing here is implemented in nearly the same way as it was in v3.9: there is a single special type for full-text documents, per-type full-text content extractors running continuously in the background, and so on.
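The v3.9-style design described above might look roughly like this Python sketch. It has one document type for all indexed content, per-type text extractors, and a background worker. Everything here is hypothetical: `FullTextDocument`, `EXTRACTORS` and `FullTextIndexer` stand in for the real implementation, and naive word matching replaces a real full-text engine.

```python
import queue
import threading
from dataclasses import dataclass


@dataclass
class FullTextDocument:
    owner_type: str
    owner_key: int
    text: str


@dataclass
class Customer:
    key: int
    name: str
    address: str


@dataclass
class Incident:
    key: int
    summary: str


# One extractor per indexed type: it decides which text is worth searching.
EXTRACTORS = {
    Customer: lambda c: f"{c.name} {c.address}",
    Incident: lambda i: i.summary,
}


class FullTextIndexer:
    def __init__(self):
        self.documents = {}  # (type name, key) -> FullTextDocument
        self.pending = queue.Queue()
        self._lock = threading.Lock()
        threading.Thread(target=self._run, daemon=True).start()

    def enqueue(self, entity):
        # Called when an entity is created or changed; indexing happens later.
        self.pending.put(entity)

    def _run(self):
        while True:
            entity = self.pending.get()
            try:
                extractor = EXTRACTORS.get(type(entity))
                if extractor is not None:
                    doc = FullTextDocument(type(entity).__name__, entity.key, extractor(entity))
                    with self._lock:
                        self.documents[(doc.owner_type, doc.owner_key)] = doc
            finally:
                self.pending.task_done()

    def search(self, word):
        # Naive whole-word match standing in for a real full-text engine.
        word = word.lower()
        with self._lock:
            return sorted(key for key, doc in self.documents.items()
                          if word in doc.text.lower().split())


indexer = FullTextIndexer()
indexer.enqueue(Customer(1, "Ivanov", "Lenina 5"))
indexer.enqueue(Incident(7, "Gas leak reported by Ivanov"))
indexer.pending.join()  # wait until the background worker has caught up
results = indexer.search("ivanov")
```

A single document type means one search query covers every indexed entity type, and each type only has to say which of its text is searchable.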

Here is a typical list (take a look at grid settings and search box):

Some forms:

The list of actions in the left panel is actually pretty long: you see maybe 30% of it. There is no scrollbar, but you may notice "Up" and "Down" arrows. They indicate that the list scrolls automatically when the mouse pointer approaches its top or bottom edge.

Integration services control list:

Web site, customer's home page:

Pages for registering natural gas consumption counter values and interacting with support staff:

And finally, a single screenshot exposing the complexity of domain model:

As you can see, our team did a huge job, and it is directly related to DataObjects.Net.

The main point of this post is: DataObjects.Net is designed for developing really complex business applications fast. Why? Well, that's the topic of my subsequent posts, but for now I'd like to touch on the key points:

- The code-only approach allows developers to work fully independently without caring about schema changes at all, even in different branches.

- Integrated schema upgrade capabilities are ideal for unit testing (and testing in general). It's easy to launch unit tests for any part of your application.

- Rich event and interaction model simplifies development of shared logic, such as full-text indexing and change tracking.

- Excellent LINQ support brings significant advantages when you start writing reports: queries there may require a really good translator.

- Can you imagine dealing with 500+ types in EF? Actually, I just tried to find some reports about this on the web, but found only "avoid this" statements, with tons of reasons. The funniest one is about the performance and usability of IntelliSense when you type "dataContext.".

To be frank, of course I got lots of issue reports from our "Single Window" team during these months, and I still get them. Mostly they were related to LINQ and schema upgrade. So if you use DO, you can say "thanks" to Alex Ilyin: he simply tortured me and Alexis Kochetov, and still does. E.g. mainly because of him:

- DO4 translates really complex LINQ expressions involving DTOs (custom types and anonymous types).

- Schema upgrade works really well and fast now. Domain.Build(...) is 2-3 times faster there (Alex hates waiting for the application to launch). E.g. our 440-type Domain now takes ~4 seconds to build in Skip mode on my moderate office PC (Core 2 Duo). To achieve this, I had to parallelize some stages of the build process.

Let me finish with one more screenshot:

P.S. I'm really interested in examples of complex applications relying on popular ORM tools. If you know of some, please share the links. I have the impression that people still avoid using ORM tools in such cases (there are opinions like "ORM is not for enterprise!"). So I'd like to "measure the length" in terms of model complexity (number of tables, types, etc.), just for fun, of course. As you know, we like to measure various features of ORM tools.
