Friday, August 28, 2009DataObjects.Net code coverage

I just published an article describing DataObjects.Net code coverage in its Wiki. You can download full coverage report there as well.

Labels:

DataObjects.Net,

tests

Thursday, August 27, 2009Upcoming update: disconnected state, über-batching, future queries, object versions, prefetch API, locking, Oracle support

I wrote that after June's release of v4.0 final DataObjects.Net development must go much faster. Earlier we were busy with LINQ (simply hell!), and recently we proved our implementation is not just mature enough. From many points it better than LINQ in EF.

Anyway, now we our hands are free from this. And here is a brief description of what we develop right now: 1. Disconnected state Later I'm going dedicate a separate post for description of this feature. Moreover, in several days days I'll be ready to publish an example of its usage, since significant part of its logic is already implemented. Disconnected state, in fact, turns the Session into NHibernate or EF-like Session/DataContext:

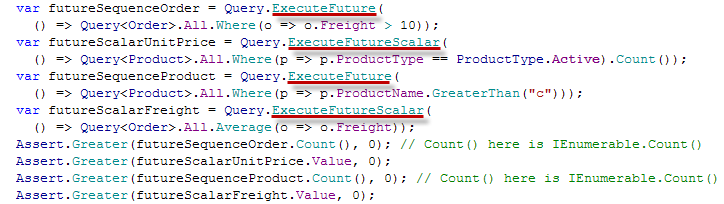

DisconnectedState can be attached / detached to a Session (any Session!) at any moment of time between its logical transactions; moreover, it is fully serializable. When DisconnectedState can be used?

2. Über-batching It's actually quite simple: we already implemented almost everything I described in my previous post. The only part left unimplemented is parallel batch execution. Although you may notice we already implemented AsyncProcessor in Xtensive.Core.Threading. So the age of über-über-batching is near :) New batching is already integrated into the latest nightly build. Shortly we'll publish v4.0.6, that will deliver it along with few other features described here. 3. Future queries This is one of features of generalized batching, so it is also already implemented. Working code from our unit tests:  So this will be available in v4.0.6. Btw, have you noticed all future queries are compiled ones? :) 4. Object versions As many of you know, DataObjects.Net v1.X-3.X were exposing "unified" Id and VersionId properties. First one was playing a role of "unified" key (Int64), and the second one - of "unified" version (Int32). And this approach worked - of course, if custom key or version were not necessary. DataObjects.Net v4.0 provides new Key concept:

5. Prefetch (eager loading) API This part is in "deep development" now, so I can provide only rough information. We expect it will be more or less complete in the middle of September. All the API based on several overloads of IQueryable.Prefetch extension method. Prefetch API is fully based on expressions, so there are no any text-based prefetch paths. Finally, it will be possible to choose eager load mode for a particular prefetch path:

Locking closes one of the gaps we had: there were no Lock-like methods. Now this part is implemented ideally: there is a group of IQueryable.Lock extension methods allowing to specify:

7. Oracle support This looks a bit fully, but... Its provider made us to implement much more specific adjustments for it in comparison to e.g. PostgreSQL. The worst thing is that it does not allow to perform a correlated subquery when a nested subquery references a column from a table referred to a parent statement any number of levels above the subquery; instead, it allow to reference a column from table referred by a parent statement only. More precise description of this "feature" can be found here. Funny, but they specially disabled it - such queries worked well in 10g Release 1 (10.1), but later Oracle developers disabled this. Frankly speaking, I don't remember anyone else doing something similar - presence of this feature lead to no any disadvantages! I'm curious, do they really think all the queries sent to Oracle are written & optimized by humans? Anyway, now about 150 out of ~ 1000 tests fail on Oracle, and this happens mainly because of mentioned issue. Everything else seems working. If we'll be able to workaround this issue in the nearest days, you will be able to see Oracle provider in v4.0.7. At this moment my brief description of our short-term plans is finished. I hope you like the direction and speed we maintain. Sunday, August 23, 2009Generalized batching

In this post I'll describe statement batching techniques used in DataObjects.Net. I'll describe a bit more than we already have - to give you a full picture of what you must find in the nearest update.

So what batching is? Batching is a way of reducing chattiness between RDBMS and its client. Normally client sends each command individually - DbCommand.Execute and similar methods are designed to return a single result immediately; moreover, DbConnection is not thread safe object. So there are some issues. Let's think what can be done to approach them. 1. ADO.NET batching support Note: we don't use this approach in DO4. I'm describe it here to provide full overview of possible approaches. ADO.NET has built-in batching support, but currently this feature is exposed only as feature of SqlDataAdapter and OracleDataAdapter. Its underlying implementation is actually generic, but as it frequently happens with Microsoft, it is not exposed as public part of API. On the other hand, there are workarounds allowing to utilize it. ADO.NET batching is implemented on communication protocol level: you shouldn't modify command text (e.g. set unique parameter names) to utilize it. Its another important advantage is possibility to get DML exacution result with ease. Its main disadvantages are: - You must use either internal API (SqlCommandSet class), or rely on SqlDataAdapter (must be slower; moreover, you lose all the genericity with it) - Currently this works only with SQL Server. The same should work for Oracle as well, but it appears no one has tried to even test similar part of its internal API. Finally, this approach ensures the sequence of commands executed on SQL Server will be exactly the same, since ADO.NET batching is implemented on protocol level, and this implies few other consequences: - SQL Server won't try to reuse cached plan for the whole batch. Because there is simply no "whole batch". So it appears this approach is better, if we constantly send different batches: SQL Server is simply upable to reuse the plan in this case. Likely, this was very important until appearance of SQL Server 2005: it caches plans both for batches and individual statements there. - On the other hand, it is worse when we constantly send very similar batches: although SQL Server could re-use their plans, it can't, because there are no real batches. - There is a little bit of extra cost in the server per-RPC execution in this case. If you're interested in further details, refer to: - Good description of ADO.NET batching from ADO.NET team (rather old - May 2005) - Plan caching in SQL Server 2008 2. Manual batching support That's actually the most obvious solution: combine multiple SQL statements into a single command. DO4 currently relies on this approach. Its main advantage is compatibility with most of databases. Probably, there are others (e.g. faster command processing because of plan caching for the whole batch), but they're very database dependent. Our current tests for SQL Server shows significantly better results in comparison to ADO.NET batching; on the other hand, these tests are ideal for batch plan caching. So further we'll work on more realistic tests related to this part. Here are typical DataObjects.Net batch requests (SQL was beautified with a tool):   This way is a bit more complex for implementation: - You must be able to build each individual statement in the batch quickly. Taking into account parameter names must be unique there, this becomes a bit tricky. In our case this part is handled solely by SQL DOM: it implements caching of generated SQL for DOM branches, so in fact only first compilation of each SQL DOM request model is complex (actually it is pretty cheap anyway), but subsequent compilations of request with minor modifications of its model are extremally fast. - You must decide how to deal with errors. I'll describe how we deal with them in further posts. - Some databases (e.g. Oracle) require special constructions to be used for batches ("BEGIN" ... "END"). - If you batch not just CUD statements, everything becomes even more complex: although you can access each subsequent query result with DbDataReader.NextResult method, you must decide how to pass it to the code requiring it. In our case this makes everything more complex, since we initially relied on MARS, but in this case you can use MARS for the last batch query result only (others must be processed prior to it). Generalized batching Until now we were discussion just CUD (create, update, delete) sequence batching. Some of ORM tools on the market implement it replying either on the first (NHibernate) or on the second (DataObjects.Net) approach. But is this all where the batching can be used? No. Let's start from very simple example: var customer1 = new Customer() {...} var customer2 = new Customer() {...} foreach (var entity in Query As you see, there are two CUD operations and a single query. And all these operations can be executed as a single batch. But AFAIK, none of ORM tools implements even this simple case. Why? Actually, because their API makes this not so obvious for ORM developers: - Normally there is a method flushing all cached changes. In case with DataObjects.Net this method is Session.Persist(). - This method guarantees all the changes are flushed on its successfull completion. - Query API relies on such method to ensure all the changes are flushed before query execution. As you see, such a logic makes this really less obvious: persist and query APIs are completely different, although in this case they must be partially intagrated. Let's think how this could be performed on better API: - Session.Persist(PersistOption.QueueOnly) ensures all the current changes are queued in low-level persist/query pipeline. - This method is called before execution of any query. - When query is executed, it is actually appended as the last statement in the last queued batch, if this is possible. So Session.Persist(PersistOption.QueueOnly) completion means ORM framework has promised to persist all the changes before the next query (or transaction commit), instead of actual guarantee all the changes are already persisted. That's what generalized batching is: batch everything that is possible to batch, but not just CUD operations. It's interesting to calculate the effect of generalized batching from the point of chattines (number of network roundtrips): - No batching: 1 (open transaction) + CudOperationCount + QueryCount + 1 (commit). - CUD batching: 1 (open transaction) + CudBatchCount (~= CudOperationCount/3...25) + QueryCount + 1 (commit). - Generalized batching: 1 (open transaction) + SeparateCudBatchCount (~= 0!) + QueryCount + 1 (commit). Huge effect. * SeparateCudBatchCount is count of CUD batch parts without queries. Since batch size is limited, they'll appear when the number of CUD operations between queries is large enough (~ >25). Likely, it will be close to zero in most of real life cases. Generalized batching and transaction boundaries As you see, it's possible to eliminate at least one more database roundtrip: opening a transaction. In fact, this command could be send as part of the first batch. Initially this looks attractive... On the other hand, this makes impossible to use DbTransaction objects, as well as intagrate with System.Transactions. Moreover, duration of a single transaction is normally at least ~ 1/100...200 of second, although single network roundtrip is ~ 1/10000 of second. So it looks like it's better to forget about this optimization. Generalized batching and future queries Some ORM frameworks implement future queries (e.g. NHibernate): - When future query is sceduled for execution, ORM guarants it will be executed later, and will ensure its result will be available on attempt to get it (enumerate it or get its scalar value). - This allows ORM to execute such a query as part of a single batch containing other similar queries. So there will be single netowork roundtrip instead of multiple ones. Future queries can be integrated with generalized batching very well. Let's look on content of typical batch in this case. There will be: - Optionally, CUD operations - Optionally, future queries - Query that lead to batch execution (either future query or a regular one). And the total count of roundtrips is: - Generalized batching w. future queries: 1 (open transaction) + SeparateCudBatchCount (~= 0!) + NonFutureQueryCount + 1 (commit). But which queries can normally be executed as future ones? In general, all the queries that aren't "separated" by CUD operations can be executed as future queries! So if you're mainly reading the data, future queries are right for you. Generalized batching makes them quite useful in data modification scenarios as well. Parallel batch execution Earlier you've seen I proposed to use Session.Persist(PersistOption.QueueOnly) - it ensures all the current changes are queued in low-level persist/query pipeline. Obviously, it may not just prepare the batches for later execution, but start a background thread execution "completed" batches from this queue as well. E.g. if we created 110 objects, and batch size is 25, invocation of this method must lead to creation of 5 batches. First 4 of them must be completed, but the last one containing 10 statements is not. If parallel batch execution is implemented, we'll start to execute first batch from our queue in background immediately; and later - the next ones. Obviously, this implies lots of complexities. Some of them are: - Synchronization on queue and underlying DbConnection - Careful error reporting: if error occurs during background batch processing, we must ensure it will be passed to the user thread. On the other hand, all of them can be resolved. Main benefit of this approach is that user thread won't wait while its changes are persisted. It can work to produce the next batch. And I think this is important: this may significantly reduce the duration of transactions in bulk operations. As you know, presence of ORM makes them noticeable longer (e.g. without this feature a time we spend on building the batches increases it; but if they're executed in parallel, we simply "flood" database with the job). Parallel batch execution in combination with generalized batching must bring nearly the performance of SqlBulkCopy to ORM based solutions. Hopefully, I'll be able to prove this on tests further. I'm pretty optimistics here, since v4.0.5 with its CUD-only batching seems to be just 1.5 times slower than SqlBulkCopy. Autmoatic background flushes Currently DataObjects.Net automatically calls Session.Persist() when the number of modified, but not persisted objects becomes greater than 500. We'll replace this to Session.Persist(PersistOption.QueueOnly), and likely, will decrease the flush limit to ~ 100-200. So in general it won't be necessary to manually call Session.Persist at all. Automatic background flushes will be a default feature. Batching in DataObjects.Net

As I've mentioned, publicly available v4.0.5 implements just CUD sequence batching by manually composing the commands of multiple statements. This works pretty well - tests approve this (I'm showing the results here, since we decided to do not publish DataObjects.Net scores @ ORMBattle.NET until it will be accepted by the people): DataObjects.Net (batch size is 25): Create Multiple: 20105 op/s Update Multiple: 35018 op/s Remove Multiple: 42542 op/s NHibernate (ADO.NET batching is on, batch size is 25): Create Multiple: 12617 op/s Update Multiple: 17148 op/s Remove Multiple: 18510 op/s Now we're working on everything else described in this post. I hope to show a complete solution till the middle of September. Such a solution for DataObjects.Net is even more attractive: as you know, it is designed to completely abstract the database. I'd like to mention just one example where such bulk operation performance must be necessary: we have a generic schema upgrade API. Renaming and copying of fields and classes are among its very base features; in the end of all you can implement custom upgrade. And we did all to allow you to use the same ORM API even here: we expose old structures as "recycled" persistent types accessible during a special Domain.Build phase along with new ones, so you can copy/transform their data using the same way as if you'd do this in runtime, and thus keeping your upgrade routines fully database independent. And as we know in practice, performance here is of huge importance. ORM performance: what's next? Next time I'll discuss materialization in DataObjects.Net - we really did a lot to compete well with materialization of simple entities in other frameworks (EF). Finally, I'll describe what you can expect from us further: parallelized materialization.

Labels:

DataObjects.Net,

performance

Wednesday, August 19, 2009Issues with DataObjects.Net v4.0.5 on Windows 7

We discovered v4.0.5 installer suffers from the following issues on Windows 7:

- Shows "published unknown" warning (just ignore it) - Can't install assemblies into GAC if you aren't logged in as local Administrator. I.e. if you are logged in as user from "Domain Admins" group, it won't work anyway. So it's necessary to log in as use from local "Administrators" group to install it.

Labels:

DataObjects.Net,

issues

Saturday, August 15, 2009DataObjects.Net v4.0.5 is out

Labels:

DataObjects.Net,

releases

Wednesday, August 12, 2009ORMBattle.NET is unofficially launched

Check it out: http://ormbattle.net

ORMBattle.NET is devoted to direct ORM comparison. We compare quality of essential features of well-known ORM products for .NET framework, so this web site might help you to:

P.S. DataObjects.Net v4.0.5, which is tested there, is the most current version we have. It will be released tomorrow or the day after - along with official launch of ORMBattle.NET.

Labels:

announcements,

comparison,

DataObjects.Net,

performance

Saturday, August 01, 2009

DataContext vs Query

|

SubscriptionBlog Archive

Labels

|

News, examples, tips, ideas and plans.

Thoughts around ORM, .NET and SQL databases.

|

|