Anyway, now we our hands are free from this. And here is a brief description of what we develop right now:

1. Disconnected state

Later I'm going dedicate a separate post for description of this feature. Moreover, in several days days I'll be ready to publish an example of its usage, since significant part of its logic is already implemented.

Disconnected state, in fact, turns the Session into NHibernate or EF-like Session/DataContext:

- Anything it fetches will be truly stored there, rather than cached, while DisconnectedState is attached to the Session. "Truly stored" means Session won't try to update the state of each cached Entity in each subsequent transaction. So as you see, attached DisconnectedState "blocks" the ability of Session to automatically reflect the most current state from the storage.

- Attached DisconnectedState "blocks" the ability of Session to automatically flush the changes to the storage - they will be flushed by explicit request only. Although there will be two options allowing to automatize this: AcceptChangesOnQuery and AcceptChangesOnCommit.

- So attached DisconnectedState affects on queries. If changes aren't accepted before a particular query, its result might differ from the expected one by default.

- Since DisconnectedState stored the data acquired in past and allow to change it, optimistic version checks are performed when these changes are persisted. See the description of "object versions" feature for further details.

- Attached DisconnectedState affects on transactions. When a transaction starts in the Session with attached DisconnectedState, in fact, this is logical transaction. But it might lead to actual database transaction, if it will hit the database (so everything is nearly the same as when SessionOptions.AutoShortenTransactions is on). Each logical transaction (with physical one, if it is already attached to it) can be either committed or rolled back.

- Web applications almost never need DisconnectedState at all.

- The same is correct for web services. They are stateless.

DisconnectedState can be attached / detached to a Session (any Session!) at any moment of time between its logical transactions; moreover, it is fully serializable.

When DisconnectedState can be used?

- As local cache in WPF client applications. After getting this implemented, we can honestly say we fully support WPF.

- As long-running transaction state cache in ASP.NET applications. So it can be useful e.g. on wizard pages.

- It is DisconnectedState itself, as well as its own ancestor of ChainingSessionHandler. When DisconnectedState is attached to a Session, it replaces its SessionHandler to its own. So in fact, all the ditry job is done on SessionHandler level.

- This implies we've added an API allowing to temporarily replace SessionHandler of any Session. This API is available via CoreServicesAncestor - there is ChangeSessionHandler method now. This API is secure: it can be utilized only by SessionBound types from assemblies registered in the Domain current Session belongs to.

- Finally, we've extended SessionHandler by a set of methods necessary to implement DisconnectedState. In fact, we gathered all interception points we need there.

2. Über-batching

It's actually quite simple: we already implemented almost everything I described in my previous post. The only part left unimplemented is parallel batch execution. Although you may notice we already implemented AsyncProcessor in Xtensive.Core.Threading.

So the age of über-über-batching is near :)

New batching is already integrated into the latest nightly build. Shortly we'll publish v4.0.6, that will deliver it along with few other features described here.

3. Future queries

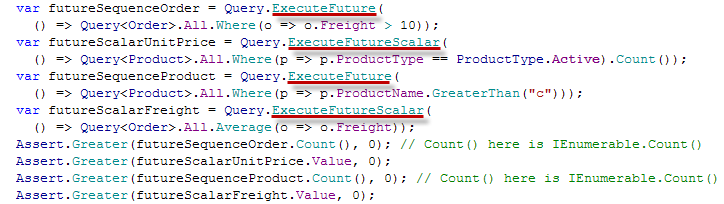

This is one of features of generalized batching, so it is also already implemented. Working code from our unit tests:

So this will be available in v4.0.6. Btw, have you noticed all future queries are compiled ones? :)

4. Object versions

As many of you know, DataObjects.Net v1.X-3.X were exposing "unified" Id and VersionId properties. First one was playing a role of "unified" key (Int64), and the second one - of "unified" version (Int32). And this approach worked - of course, if custom key or version were not necessary.

DataObjects.Net v4.0 provides new Key concept:

- Key structure is defined on the root type of each hierarchy. Or, better, hierarchy is defined by its root type, and such a root type must explicitly define key structure for the whole hierarchy. HierarchyInfo, TypeInfo and KeyInfo types from Xtensive.Storage.Model describe these concepts in runtime.

- Any Entity exposes Key property of Key type. Persistent properties composing the key are exposed as well; we require them to be read-only.

- Key is assigned to each Entity when it is created, and further it can not be changed. By default we provide two protected constructors for each Entity allowing to assign either manually specified key, or automatically generated key. Both ways can be used together - you must simply provide two or more constructors. Moreover, we provide secure API allowing to create an entity of any type with manually specified key (PersistentAccessor).

- You can extract key value (Tuple) from each Key by its Value property

- Key can be created by defining its value (Tuple) and TypeInfo using Key.Create methods

- Keys can be compared for equality. And this is really fast: they cache hash code; moreover, if hierarchy includes more than one type, we maintain identity map for its keys (actually, there is global LRU cache) to ensure we track known entity types for each key. Thus key comparison for such hierarchies is actually handled as reference comparison.

- Keys can be resolved via Query.Single* methods

- Keys can be used in LINQ queries (compared, passed as parameters and so on)

- Keys can be converted to and from string represenation. Key.Format and Key.Parse methods handle this.

- You can declare persistent properties of Key type. Such fields are stored as strings in the storage; on the other hand, they allow to reference any perisstent type.

- NHibernate does not provide any similar concept.

- ADO.NET Entity Framework provides similar EntityKey type.

- Entity.GetVersion method returning VersionInfo type provides access to unified version (current, actual or original).

- Version is declared by the same way as Key: just mark some persistent property (or properties) by [Version] attribute. If you mark nothing, we consider version is formed from all the fields except lazy load fields. If hierarchy contains multiple types, it's possible that we won't be able to build version because some non lazy load fields declared in descendants aren't loaded. An exception is thrown on attempt to get version of such an instance (i.e. you must ensure every field participating in version is loaded before it is accessed).

- VersionInfo objects (actually, structs) can be compared for equality. If you do this repeatedly, this is fast: they cache hash codes.

- You can build VersionInfo from Tuple, and vice versa.

- Query.Get/CheckVersions methods allow to get/check the stored versions of specified entities. They rely on future queries & batching, thus their involvement makes updates with optimistic version checks ~ 2 times slower than regular ones. Taking into account the fact that our regular updates are ~ 1.5 times faster than almost everywhere else, this is very good result ;)

- EntityState now have an additional flag (IsStale) indicating if it is stale or not. Stale EntityState implies an optimistic version check must be performed when it is persisted to the storage.

5. Prefetch (eager loading) API

This part is in "deep development" now, so I can provide only rough information. We expect it will be more or less complete in the middle of September. All the API based on several overloads of IQueryable.Prefetch extension method.

Prefetch API is fully based on expressions, so there are no any text-based prefetch paths.

Finally, it will be possible to choose eager load mode for a particular prefetch path:

- Joins (no additional queries, but likely, huge client-server traffic)

- Joins + future queries (smallest traffic, higher load on RDBMS)

- Future queries (small traffic, but more additional queries)

Locking closes one of the gaps we had: there were no Lock-like methods.

Now this part is implemented ideally: there is a group of IQueryable.Lock extension methods allowing to specify:

- LockMode: Shared, Update or Exclusive

- LockBehavior: Wait, ThrowIfLocked, Skip.

7. Oracle support

This looks a bit fully, but... Its provider made us to implement much more specific adjustments for it in comparison to e.g. PostgreSQL. The worst thing is that it does not allow to perform a correlated subquery when a nested subquery references a column from a table referred to a parent statement any number of levels above the subquery; instead, it allow to reference a column from table referred by a parent statement only.

More precise description of this "feature" can be found here. Funny, but they specially disabled it - such queries worked well in 10g Release 1 (10.1), but later Oracle developers disabled this. Frankly speaking, I don't remember anyone else doing something similar - presence of this feature lead to no any disadvantages!

I'm curious, do they really think all the queries sent to Oracle are written & optimized by humans?

Anyway, now about 150 out of ~ 1000 tests fail on Oracle, and this happens mainly because of mentioned issue. Everything else seems working. If we'll be able to workaround this issue in the nearest days, you will be able to see Oracle provider in v4.0.7.

At this moment my brief description of our short-term plans is finished. I hope you like the direction and speed we maintain.

No comments:

Post a Comment